I still wanted to group similar images together first – after all, how many pictures of the same bird in the same pose do you need for a training set? And wouldn’t it be easier to remove all the images with no birds first?

ChatGPT recommended using pHash (perceptual hash) and SSIM (structural similarity index measure) for image grouping. I had no idea what these were – it wouldn’t be vibe-coding if I knew what I was doing. It wasn’t long before I was pulling my hair out in frustration. Just like the free software I’d used earlier, the script grouped nearly all the photos into one folder 🤔. I tried tweaking a few parameters to change the sensitivity, but no luck. This wasn’t going to work on vibes alone – I was going to have to read up on what I was doing.

A glance at the Wikipedia pages for pHash and SSIM gave me some insight. When I said I wanted to group similar images, I meant photos of the same bird in the same spot. However, that’s not what “similar” means to a computer. The algorithms we* were using compared the overall structure of each image. pHash, for example, shrinks the image to a small grayscale thumbnail and keeps only its low-frequency components. In other words, it prioritized the broad layout of the scene (especially shapes and edges that occupied a larger fraction of pixels) while ignoring fine details like a bird’s color, size or beak shape. No wonder, then, that it dumped all the birdbath photos into a single folder.
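
For the curious, here is a minimal sketch of the pHash idea in plain Python. It is not the code ChatGPT wrote for me. It assumes the image has already been shrunk to a 32×32 grayscale grid, and the toy scenes (`birdbath`, `stripes`) are made up purely for illustration. The hash keeps the 8×8 lowest-frequency DCT coefficients and stores one bit per coefficient: is it above the median or not?

```python
import math
import statistics

N = 32  # side length of the (already downscaled) grayscale image
K = 8   # keep only the K x K lowest-frequency DCT coefficients

# COS[u][x] = cos(pi * (2x + 1) * u / (2N)), the 1-D DCT-II basis.
# Normalisation constants are omitted; this is a sketch, not a library.
COS = [[math.cos(math.pi * (2 * x + 1) * u / (2 * N)) for x in range(N)]
       for u in range(K)]


def phash(pixels):
    """Return a 64-bit hash (list of 0/1) for an N x N grayscale image."""
    coeffs = []
    for u in range(K):          # vertical frequency
        for v in range(K):      # horizontal frequency
            coeffs.append(sum(pixels[y][x] * COS[u][y] * COS[v][x]
                              for y in range(N) for x in range(N)))
    median = statistics.median(coeffs)
    # One bit per coefficient: is it above the median?
    return [1 if c > median else 0 for c in coeffs]


def hamming(a, b):
    """Number of differing bits; a small distance means 'similar' to pHash."""
    return sum(x != y for x, y in zip(a, b))


def birdbath(with_bird):
    """Toy scene: textured gradient, bright bath, optional 3x3 'bird'."""
    img = [[40 + 3 * y + 10 * ((x // 4 + y // 4) % 2) for x in range(N)]
           for y in range(N)]
    for y in range(18, 26):
        for x in range(8, 24):
            img[y][x] = 220
    if with_bird:
        for y in range(15, 18):
            for x in range(14, 17):
                img[y][x] = 0
    return img


def stripes():
    """A visibly different toy scene: diagonal stripes."""
    return [[128 + 100 * math.sin((x + 2 * y) / 3) for x in range(N)]
            for y in range(N)]


if __name__ == "__main__":
    empty = phash(birdbath(False))
    bird = phash(birdbath(True))
    other = phash(stripes())
    print("empty bath vs. bath with bird:", hamming(empty, bird))
    print("empty bath vs. stripes:       ", hamming(empty, other))
```

If the reasoning above holds, the first distance should come out much smaller than the second, because a bird that covers a handful of pixels barely moves the low-frequency coefficients. The exact numbers depend on the toy scenes, so treat them as a demonstration rather than a benchmark.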

Realizing my blunder, I pitched its own idea back to ChatGPT: could the program detect where the bird was in an image, and then let me provide a label so that it could be sorted into the correct folder? Turns out, yes.

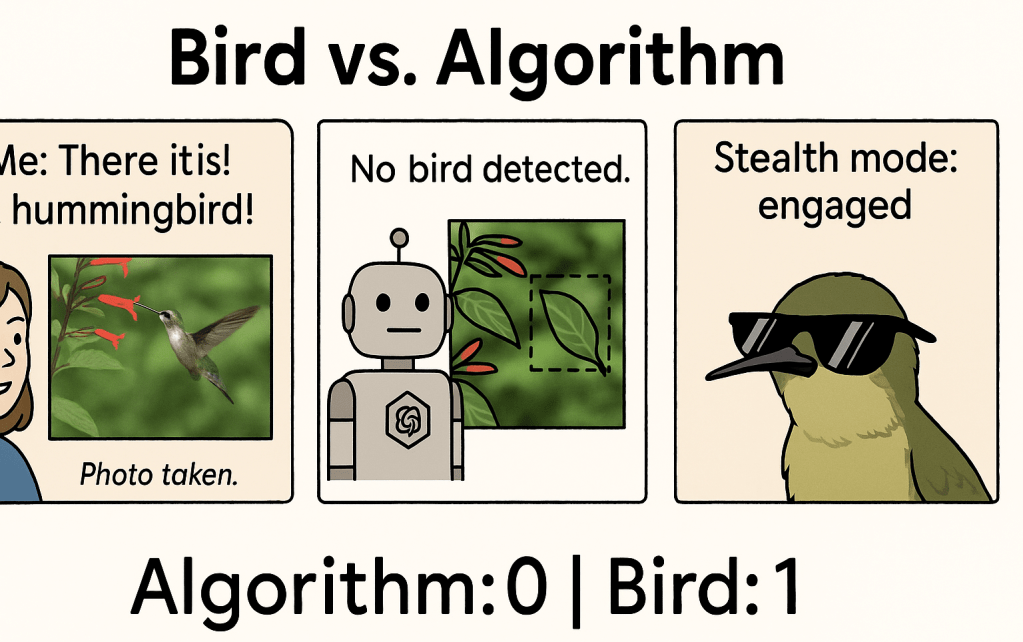

ChatGPT wrote up some code using YOLOv8n, a small convolutional neural network pre-trained to detect everyday objects, birds included. It would crop the detected bird, prompt me for a label, and then save the cropped image in the appropriate folder. It worked fairly well, detecting about a third of the birds in the images I provided, but struggled in some specific cases.
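
The detect, crop, label, save loop can be sketched roughly as follows. This is my reconstruction of the idea, not the exact script: the helper names (`padded_box`, `crop_path`, `label_birds`), the 10% padding and the `labeled` output folder are all assumptions. It relies on the `ultralytics` and Pillow packages, and on "bird" being class 14 in the COCO classes that the stock `yolov8n.pt` weights were trained on.

```python
from pathlib import Path

BIRD_CLASS_ID = 14  # "bird" in the 80 COCO classes used by the stock weights


def padded_box(box, width, height, pad=0.1):
    """Grow an (x1, y1, x2, y2) box by `pad` per side, clamped to the image."""
    x1, y1, x2, y2 = box
    dx, dy = (x2 - x1) * pad, (y2 - y1) * pad
    return (max(0, int(x1 - dx)), max(0, int(y1 - dy)),
            min(width, int(x2 + dx)), min(height, int(y2 + dy)))


def crop_path(out_dir, label, source_name, index):
    """Turn a typed label into a path like out_dir/house_finch/IMG_001_0.jpg."""
    folder = label.strip().lower().replace(" ", "_")
    return Path(out_dir) / folder / f"{Path(source_name).stem}_{index}.jpg"


def label_birds(image_paths, out_dir="labeled", min_conf=0.25):
    """Detect birds, show each crop, ask for a label, save into label folders."""
    # Imported here so the helpers above work without the heavy dependencies.
    from PIL import Image
    from ultralytics import YOLO

    model = YOLO("yolov8n.pt")  # pretrained weights, downloaded on first use
    for image_path in image_paths:
        image = Image.open(image_path).convert("RGB")
        # Only keep detections of the bird class above the confidence cutoff.
        result = model.predict(image_path, classes=[BIRD_CLASS_ID],
                               conf=min_conf)[0]
        for i, box in enumerate(result.boxes.xyxy.tolist()):
            crop = image.crop(padded_box(box, *image.size))
            crop.show()  # opens the crop in the default image viewer
            label = input(f"Label for bird {i} in {image_path} "
                          "(blank to skip): ")
            if not label.strip():
                continue
            target = crop_path(out_dir, label, image_path, i)
            target.parent.mkdir(parents=True, exist_ok=True)
            crop.save(target)


if __name__ == "__main__":
    label_birds(sorted(str(p) for p in Path("photos").glob("*.jpg")))
```

Normalizing the typed label into a folder name means “House Finch” and “house finch” land in the same place, which matters when you are typing hundreds of labels by hand.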

For example, it just couldn’t find hummingbirds.

I guessed that it was struggling to see the green of the hummingbird against the green of the leaves. ChatGPT added some image processing: boosting contrast, applying a color space transformation and edge enhancement. This brought detection closer to 50%, but it also increased false positives. And still no luck with the hummingbirds. My new hunch was that the pre-trained model didn’t see hummingbirds because they didn’t have the typical bird “shape”. I would need to improve the detector to get better results, which meant creating a labeled training set was now even more important.

At the time, I still hadn’t fully separated detection and classification into two steps in my head. So I forged ahead manually, labeling birds in about 500 images… before I realized that I’d have to create a completely different training dataset for a custom bird-detection model: a detector learns from whole images annotated with bounding boxes, not from labeled crops. D’oh!

*Again, I know ChatGPT isn’t a person. It just feels weird taking 100% of the credit for this thing when it did so much of the heavy lifting.
